Reflecting on the question of how engineering practices can best be embedded into Agile and Design Thinking methods, a thought crept into my mind and would not go away: it is key to have skilled professionals on the team. If the right set of personas were identified and elaborated, that would raise awareness and provide the justification needed to attract these professionals. As a result, quality-of-service (non-functional) aspects could be better positioned in Agile methods.

Agile and Design Thinking methods are based on a deep understanding of end user groups. By creating imaginary, archetypical ‘personas’, these methods offer a structured approach to extensively researching and documenting the behaviors, experiences, beliefs and pain points of groups of end users. The goal is to come up with a solution that is usable and meaningful for the target user groups.

As these archetypical end users mostly represent consumer roles or roles that directly support the business, it is only natural that their behaviors, experiences and pain points relate to business functionality. This business functionality is captured in the WHAT part of user stories.

To illustrate my point, I will reintroduce the famous AMGRO (Amalgamated Grocers) case study, well known to generations of IBM architects. Let’s assume that, after successfully operating their web portal on traditional infrastructure, AMGRO is now moving their shopping portal to the cloud, that they are making this move in an Agile manner, and that they have just completed a series of Design Thinking workshops.

An example of a user story created in the AMGRO cloud migration program is given below. As you can see, it adheres to the WHO, WHAT, WOW paradigm:

“It should take an AMGRO online shopper no more than 10 minutes to complete a purchase and to receive confirmation of their purchase through the new cloud-based web portal”

- WHO = an AMGRO online shopper

- WHAT = complete a purchase and receive a confirmation of their purchase through the new cloud-based web portal

- WOW = no more than 10 minutes

The only place in WHO-WHAT-WOW user stories to document quality-of-service aspects, such as performance, is the WOW part. Note that to make this particular user story ‘SMART’, the meaning of ‘a purchase’ will have to be clearly defined! A good addition could be ‘consisting of 10 items’. The user story can be made even more compelling by adding ‘and shoppers must be able to access the portal 24×7 from PCs as well as mobile devices’.

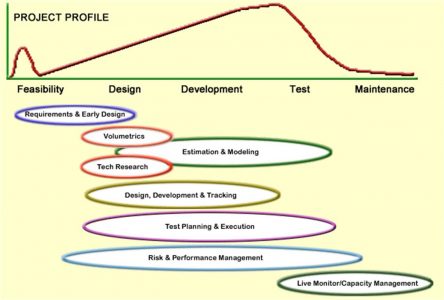
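To make the point about measurability concrete, the story can be captured as a small data structure whose WOW part is a number that a performance test can check. The Python sketch below is illustrative only: the `UserStory` class, the `wow_met` method and the measured durations are hypothetical and not part of any Design Thinking or Agile tooling. The WHAT part includes the suggested ‘consisting of 10 items’ refinement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserStory:
    """A WHO-WHAT-WOW user story whose WOW part carries a measurable target.

    Hypothetical structure for illustration; not an existing tool or API.
    """
    who: str
    what: str
    wow_max_seconds: float  # quality-of-service target expressed as a time limit

    def wow_met(self, measured_seconds: float) -> bool:
        """Return True if a measured end-to-end duration meets the WOW target."""
        return measured_seconds <= self.wow_max_seconds


purchase_story = UserStory(
    who="an AMGRO online shopper",
    what=("complete a purchase consisting of 10 items and receive confirmation "
          "through the new cloud-based web portal"),
    wow_max_seconds=10 * 60,  # "no more than 10 minutes"
)

# Hypothetical measured durations (in seconds) of individual test purchases
measured = [412.0, 587.5, 655.0]
results = [purchase_story.wow_met(t) for t in measured]
print(results)  # [True, True, False]
```

Once the WOW part is a number rather than a vague wish, every test run gives a clear pass or fail, which is exactly what a SMART requirement needs.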

So Design Thinking at least offers a way to document end user requirements for performance. It does not, however, give any guidance on how to achieve the WOW effect these requirements aim for. Thus, to ensure that performance (and the same is true for other quality-of-service aspects) is given the attention it deserves in an Agile program, we will need the Agile PEMMX approach that Phil Hunt introduced in his blog. But WHO in the Agile program will be accountable and responsible for driving that approach?

Let’s see if we can identify personas for the stakeholder groups that are directly affected by failing quality of service, and for the professionals who are responsible for preventing that from happening. The relevant questions are:

- Who, apart from the online shopper, will have a problem when purchases cannot be completed and confirmed within 10 minutes? In other words, ‘who feels the pain when the web portal does not perform?’ The answer is that there must be a product owner for the web portal, and they will probably be the one called when there is an outage or performance is unacceptably slow.

- Whose responsibility is it to design the web portal so that it meets its performance targets? In other words, ‘who will be called by the product owner to fix the performance issues?’ The answer is that the performance engineer is the most likely person to be woken up in case of problems. But the performance engineer cannot do this on her own. She needs a team of skilled specialists to come up with tuning options.

- Whose responsibility is it to test that the design meets the performance requirements? In other words, ‘who will check that the tuning options the performance engineer came up with, together with her team, actually work?’ The answer is that the performance test manager will take care of that, probably with help from one or two testers.

- Who looks after the product’s performance once it has gone live? In other words, ‘who will see to it that the optimized solution stays optimized once it has gone live?’ The answer is that the capacity manager will monitor performance and capacity in production.

We have now defined four key ‘performance roles’. In large programs there could be additional supporting roles, but these four roles are sufficiently instructive for our purpose. In subsequent blog posts we will dive deeper into these performance personas and give them a name and a face. So stay tuned!